Memo Akten

Memo Akten is a multidisciplinary computational artist who investigates the intersection of ecology, technology, science, and spirituality. A curious philomath, Memo is passionate about studying the intelligence of nature and machines, as well as human perception, consciousness, and neuroscience. As an AI wrangler, he crafts Speculative Simulations & Data Dramatizations that bring complex concepts to life. With a PhD in Creative Artificial Intelligence & Deep Learning, Memo has a deep understanding of how to imbue machine learning algorithms with Meaningful Human Control, helping ensure that AI is created with ethical considerations in mind.

Memo is currently an Assistant Professor in the Visual Arts department at UCSD, where he teaches courses in Computational Art, AI, XR, and CG. He encourages his students to explore what is possible with technology and to push the boundaries of creativity and innovation.

Analog and Video Works

Art On-Chain

Layers of Perception: Meditation #1

Layers of Perception: Meditation #2

Layers of Perception: Meditation #3

BigGAN Study #2 - It's more fun to compute, 2018

BigGAN Study #4 - BigGAN Madness, 2018

We are all connected #04 - Underworld, 2020

We are all connected #05 - Mad World, 2020

We are all connected #06 - Plug me in, 2020

We are all connected #08 - Avril 14, 2020

Autopoeisic Transmogrification Fragment

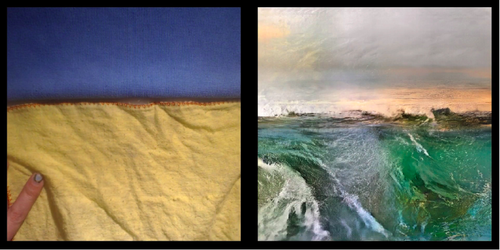

Learning To See: Gloomy Sunday #1 - Water

Learning To See: Gloomy Sunday #2 - Fire

Learning To See: Gloomy Sunday #3 - Air

Learning To See: Gloomy Sunday #4 - Earth

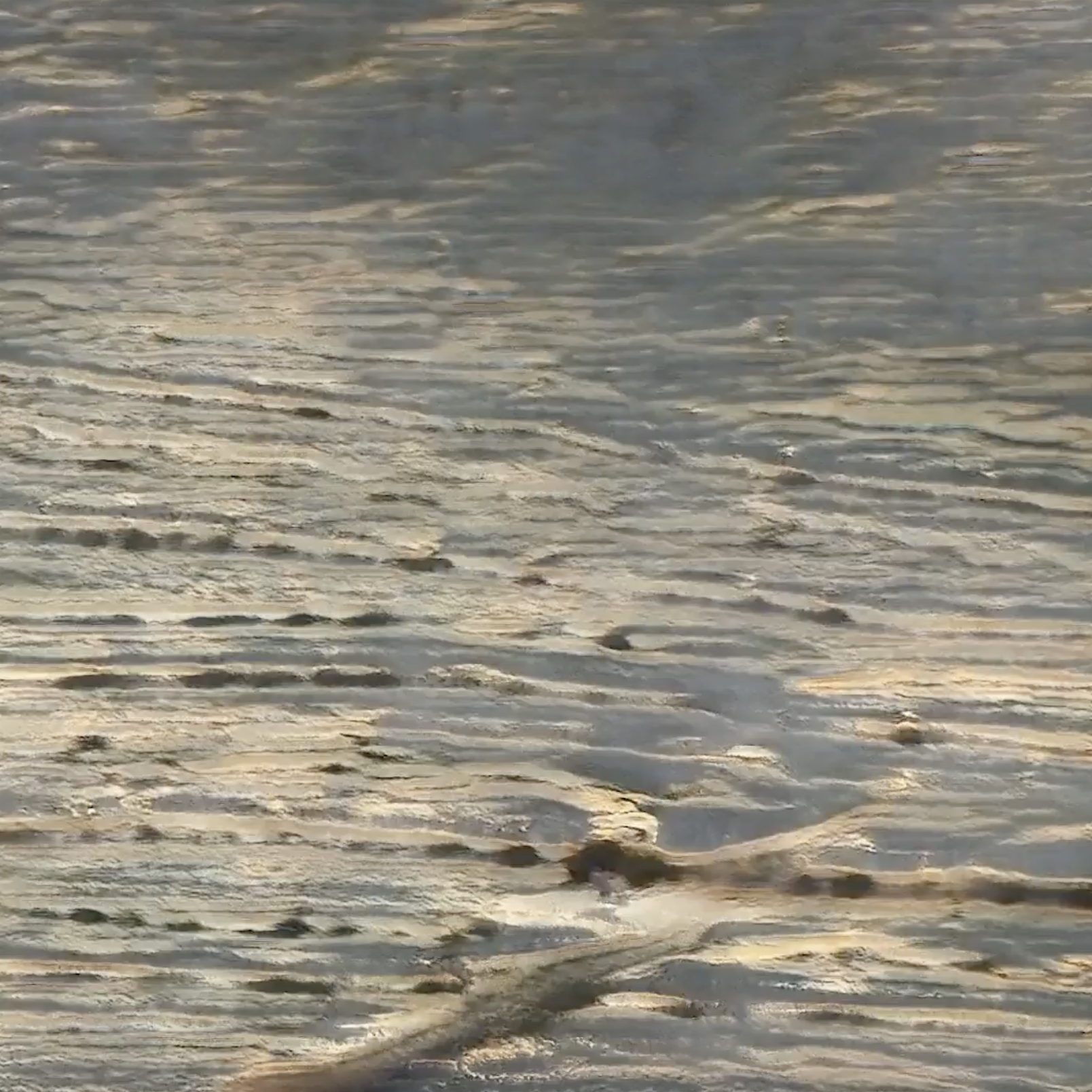

Learning To See: We are made of star dust #2

Articles

EARLY AI VIDEO WORKS BY MEMO AKTEN

Between 2018 and 2020, Memo Akten produced two AI video series, "BigGAN Study" and "We Are All Connected", both early explorations with generative adversarial networks (GANs). Each series features audio-reactive visual compositions that respond to original music the artist composed himself, without the use of AI. The works reflect the interconnectedness of all forms of life and matter, from microcosm to macrocosm.

MACHINE LEARNING ART: AN INTERVIEW WITH MEMO AKTEN

In this interview with Artnome (Jason Bailey), artist and researcher Memo Akten discusses his work with machine learning, his approach to art as a form of technological and societal reflection, and his concerns about authorship, bias, and perception in AI-generated art. Akten shares insights on his creative process, critiques of the term "AI art," and the ethical implications of emerging technologies.

ARTISTS CREATE CONNECTIONS BETWEEN THE WAY HUMANS DANCE AND NATURE MOVES IN ‘SUPERRADIANCE’

In an article published in the San Diego Union-Tribune, Memo Akten and Katie Peyton Hofstadter talk about their multimedia artwork at Birch Aquarium on Feb. 10. They are among 30 artists whose work is featured in the “Embodied Pacific: Ocean Unseen” partnership connecting art and science.

Exhibitions

ART BASEL, UNTITLED ART FAIR, MIAMI, 2024

We presented a curated selection of pioneering AI and technology-driven artworks at the Untitled Miami Art Fair, emphasizing the historical development of AI in art.

AUTOMAT UND MENSCH 2.0, ZÜRICH, 2024

In revisiting the Automat und Mensch show, we aimed to reflect on how AI art and generative art have evolved over the past half-decade.

BOUNDARIES, VENICE BIENNALE, 2024

Boundaries (2024) by Memo Akten, which explores the connections between self and universe, was exhibited at Chiesa di Santa Maria della Visitazione as part of the Venice Biennale.

NOISE MEDIA ART FAIR, ISTANBUL, 2024

At the NOISE Media Art Fair, the gallery presented mixed-media artworks by local and international artists, including Memo Akten, Emre Meydan, David Young, Mario Klingemann, and Kevin Abosch.

FREAK SHOW, ZUG, 2023

“Freak Shows” have a long history dating back to medieval times, when people considered physically unusual were put on display like caged animals.

THE BEAUTY OF EARLY LIFE, ZKM, 2022

Deep Meditations: Abiogenesis focuses on the emergence of life and the evolution of single-celled and multicellular organisms. The work was exhibited at The Beauty of Early Life at ZKM in 2022.

AUTOMAT UND MENSCH, ZÜRICH, 2019

The Automat und Mensch exhibition is, above all, an opportunity to put important work by generative artists from the last 70 years into context by showing it in a single location.